AI Compliance in Pharma: Regulatory Risks, Monitoring Frameworks, and Governance Models

Prevent risky AI outputs before they ship. Set up continuous compliance monitoring with guardrails, audit trails, and real-time exception handling.

The pharmaceutical industry is currently navigating a pivotal transformation driven by the collision of two powerful forces: the exponential adoption of Generative Artificial Intelligence (GenAI) and an increasingly rigorous regulatory enforcement landscape targeting digital communications. As pharmaceutical companies deploy Large Language Models (LLMs) to power sales team co-pilots and healthcare professional (HCP) facing chatbots, they confront a critical operational paradox. The stochastic nature of GenAI, its ability to generate novel and probabilistic responses, conflicts directly with the deterministic rigidity of pharmaceutical compliance, where every claim must be substantiated, every risk disclosed, and every adverse event (AE) reported.

This report provides an exhaustive analysis of the emerging market for AI Output Compliance Monitoring, a specialized technology segment dedicated to the real-time surveillance, auditing, and governance of AI systems within the life sciences sector. The analysis focuses specifically on two high-stakes use cases: Sales Team Chatbots (internal tools assisting representatives with pre-call planning and content retrieval) and HCP Chatbots (external interfaces on drug websites providing medical information).

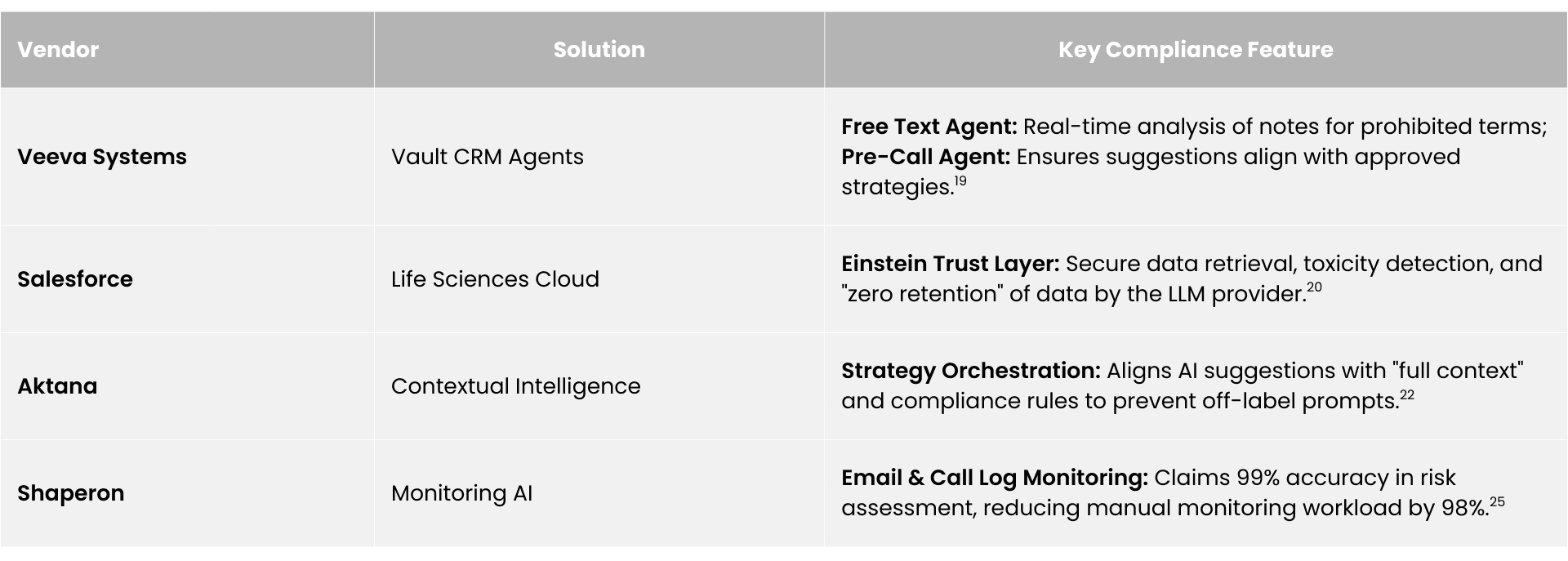

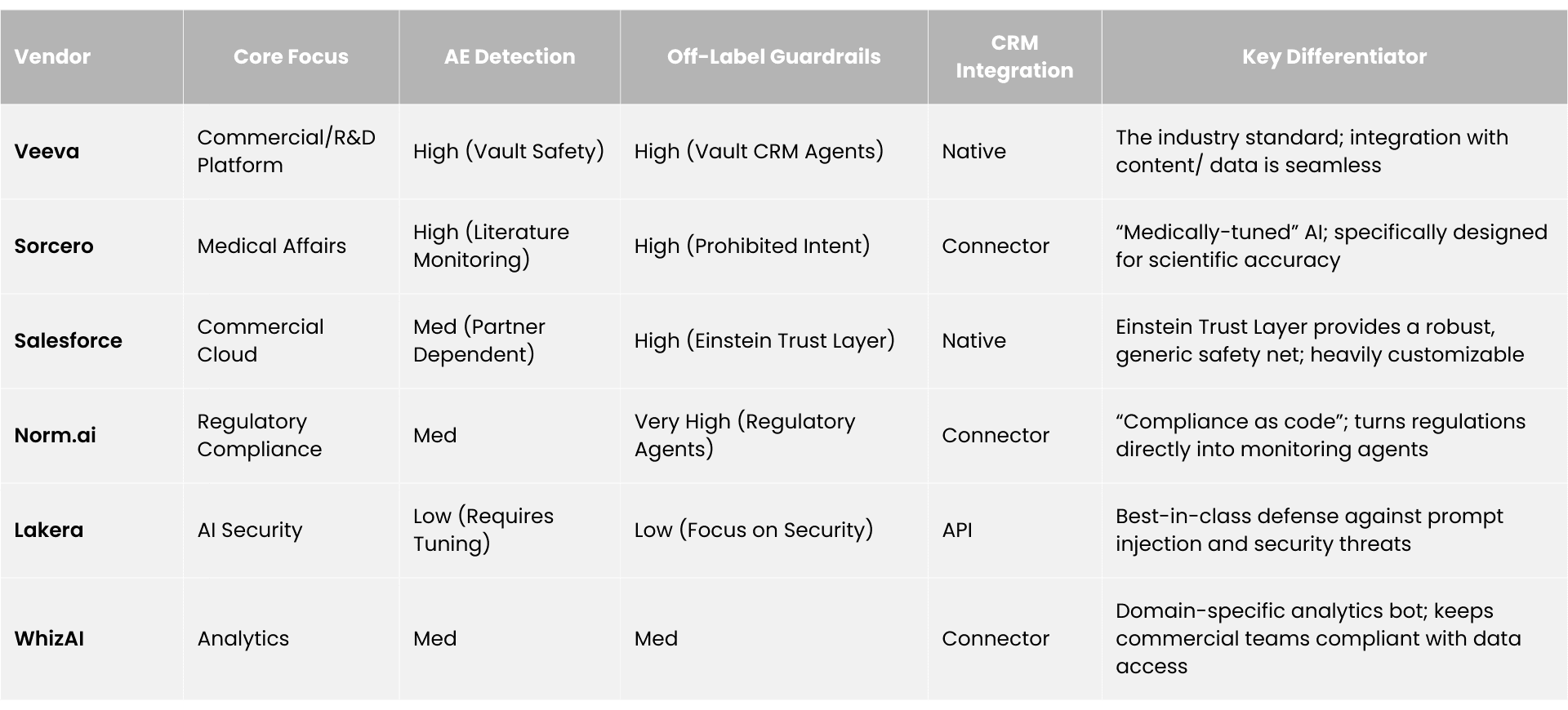

Our research indicates that the market is rapidly bifurcating into a multi-layered ecosystem. At the infrastructure level, "Guardrail" providers like Lakera and NVIDIA NeMo offer horizontal security against prompt injection and data leakage. At the application layer, vertical specialists like Sorcero, Norm.ai, and WhizAI are deploying "Semantic Compliance" agents capable of interpreting complex biomedical context, detecting off-label intent, and automating pharmacovigilance intake. Meanwhile, platform incumbents like Veeva Systems and Salesforce are embedding "Agentic AI" directly into the workflow, aiming to commoditize compliance monitoring as a native feature of the CRM.

The drivers for this market are existential. With the FDA’s Office of Prescription Drug Promotion (OPDP) signaling a return to aggressive enforcement and leveraging its own AI tools to surveil the digital landscape, pharmaceutical companies are compelled to adopt “AI to watch AI.” The cost of non-compliance, ranging from warning letters to massive civil liabilities for hallucinated medical advice, far outweighs the investment in monitoring infrastructure. This report projects that by 2026, real-time AI compliance monitoring will transition from a best practice to a mandatory component of the pharmaceutical tech stack, fundamentally altering the operating model of Commercial and Medical Affairs organizations.

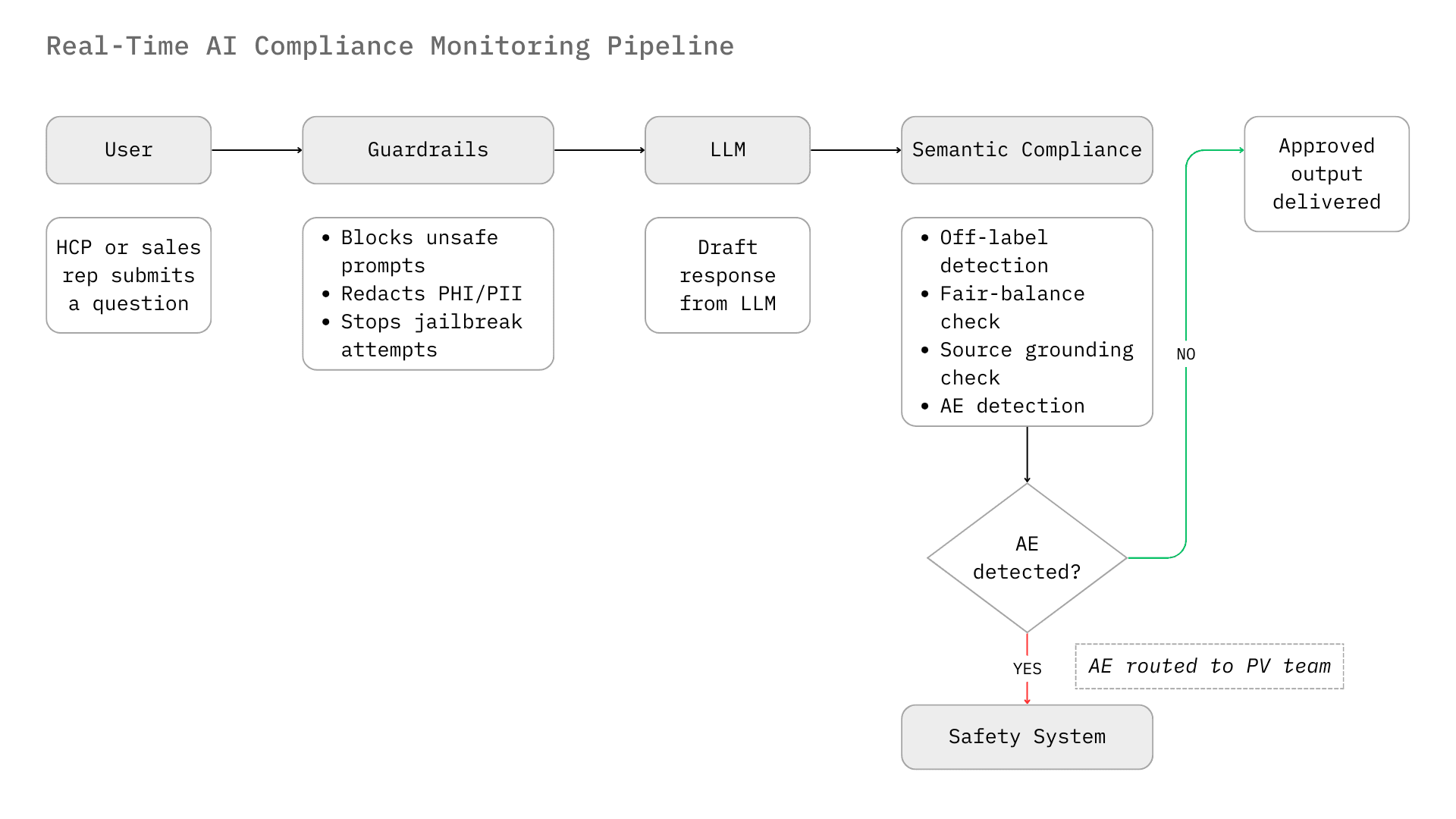

The deployment of conversational AI in life sciences represents a fundamental shift in the enterprise risk surface. Traditional digital assets, such as static websites, detail aids, and email templates, are deterministic. They undergo rigorous Medical, Legal, and Regulatory (MLR) review before dissemination. Chatbots and conversational agents, however, are dynamic. They generate responses probabilistically in real time, effectively bypassing the pre-approval containment mechanisms that have defined pharma compliance for decades. This shift necessitates a move from pre-approval review to real-time monitoring.

The FDA's Office of Prescription Drug Promotion (OPDP) is the primary arbiter of truthful and non-misleading promotion in the United States. The integration of AI chatbots introduces a unique liability: hallucination-driven misbranding. If an HCP chatbot on a drug website, driven by an LLM, fabricates a clinical study result or suggests efficacy in an unapproved indication (off-label), the manufacturer is strictly liable for misbranding under the Federal Food, Drug, and Cosmetic Act (FD&C Act).¹

Recent enforcement trends indicate that the FDA is modernizing its oversight capabilities to match the industry's technological adoption. The agency has explicitly committed to leveraging "AI and other tech-enabled tools" to proactively surveil promotional activities, with a specific focus on "AI-generated health content and chatbot interactions".¹ This development creates a technological arms race; regulators are using AI to detect violations, compelling pharmaceutical companies to deploy superior AI compliance monitoring to prevent them.

The FDA's focus has expanded to "closing digital loopholes," explicitly mentioning algorithm-driven targeted advertising and chatbot interactions as areas of renewed scrutiny.² This is not a theoretical risk; in September 2025, the FDA issued a flurry of enforcement letters, signaling a departure from the "overly cautious approach" of previous years and a return to the aggressive enforcement paradigms of the late 1990s.³ The agency's use of AI to scan vast troves of digital content means that the probability of detection for non-compliant chatbot outputs is approaching 100%.

In traditional media, "fair balance" (the presentation of risk information comparable to benefit claims) is structural. A print ad has a "brief summary" page; a TV spot has a rolling "major statement." In a chatbot conversation, fair balance must be temporal and contextual.

If a sales rep's internal chatbot suggests a talking point about efficacy, it must simultaneously surface the relevant safety warnings. If an HCP chatbot answers a query about dosage, it cannot omit contraindications. Compliance monitoring systems for these interfaces must possess Contextual Awareness. They cannot simply scan for keywords; they must evaluate the gestalt of the conversation to ensure that the risk profile is presented with "equal prominence" to the benefit profile.³

The complexity of this task is compounded by the "Clear, Conspicuous, and Neutral" (CCN) standards finalized by the FDA in 2023.¹ A chatbot response that buries risk information in a dense paragraph or a hyperlink may fail the CCN standard. The monitoring AI must assess the readability and prominence of the safety information within the chat stream, ensuring that the "major statement" is not just present, but effectively communicated. The FDA's move to eliminate the "adequate provision loophole"—which previously allowed broadcast ads to reference a website for detailed risks—suggests that chatbots will be held to a standard where safety information must be integral to the interaction, not offloaded to a secondary source.²

Perhaps the most critical operational risk in pharma AI deployment is the detection of Adverse Events (AEs). Pharmaceutical companies are legally mandated to report AEs to health authorities (FDA, EMA, PMDA) within strict timelines (e.g., 15 days for serious, unexpected events).⁴

Chatbots interacting with HCPs or patients inevitably solicit health data. A patient might type, "I took your drug and felt dizzy." A standard LLM might respond empathetically. A compliant system must recognize "dizziness" as a potential AE, classify it, capture the reporter's details, and route the unstructured text to the safety database (e.g., Argus, ArisG) for processing.⁵

Failure to capture an AE mentioned in a chatbot conversation constitutes a significant compliance violation. The market for AI monitoring in this sector is driven by the need to automate this "intake and triage" process. Manual review of every chat log is economically unfeasible given the volume of interactions. Therefore, companies require AI monitoring solutions with high sensitivity (recall) to ensure that no potential safety signal is missed.⁷ This is distinct from standard "toxicity" monitoring; an AE description is often benign in tone but critical in regulatory weight.

The pharmaceutical industry harbors a profound distrust of "Black Box" AI. A recent survey revealed that 65% of pharma marketers distrust AI for creating regulatory submissions due to concerns over hallucinations and lack of traceability.⁹ This sentiment drives the technical requirements for compliance monitoring systems. They cannot merely be filters; they must be auditable systems of record.

Monitoring systems must provide a "Glass Box" view. It is not enough to block a response; the system must log why it was blocked, citing the specific business rule or regulatory statute. This creates an immutable audit trail necessary for responding to FDA inquiries or Department of Justice (DOJ) investigations.¹⁰ The demand is for "Explainable AI" (XAI) that can justify its decisions—e.g., "Blocked response because it implied efficacy in a pediatric population, which contradicts Section 4.1 of the USPI."

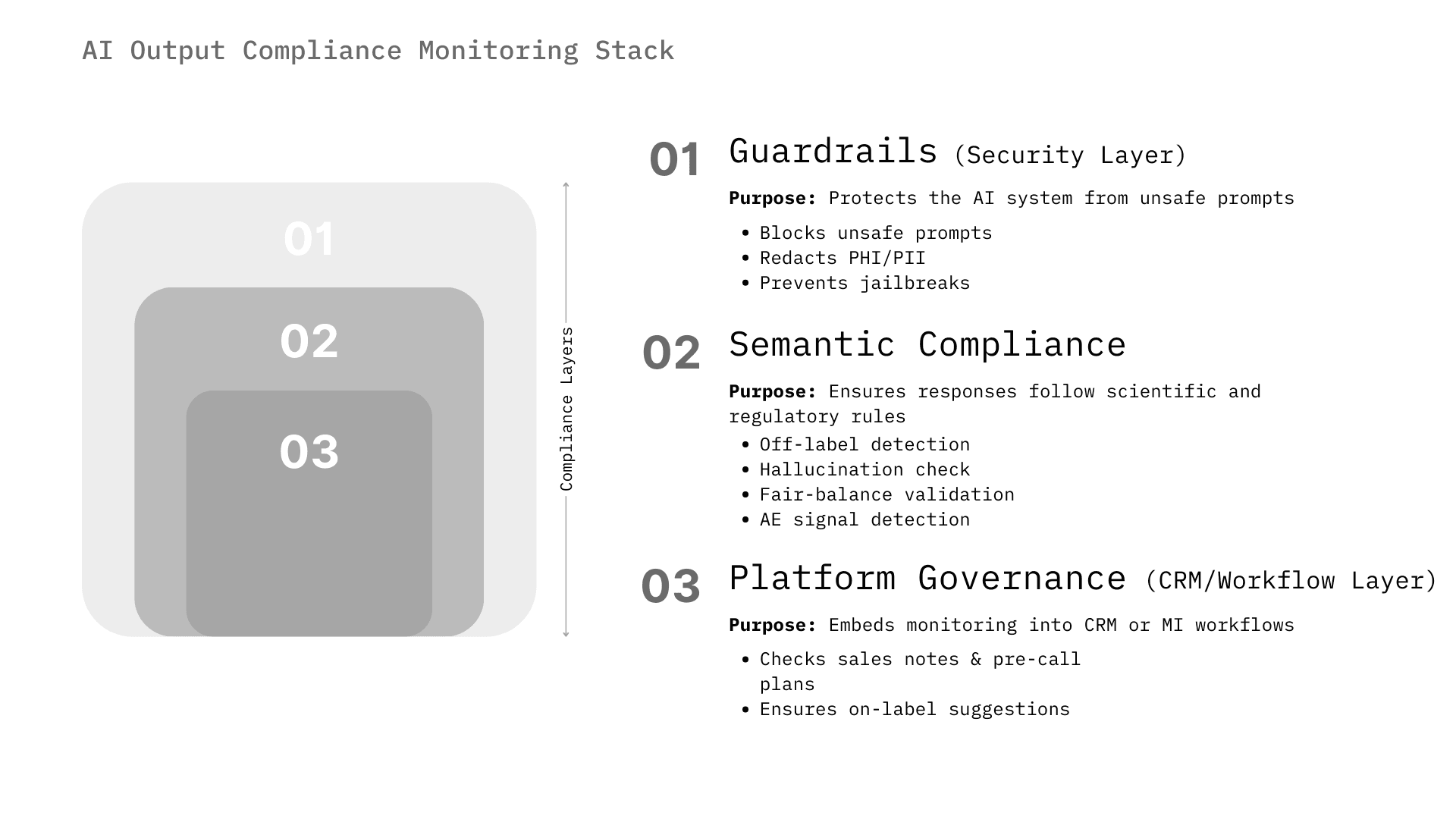

The market for AI compliance monitoring is not monolithic. It spans from infrastructure-level security (preventing prompt injection) to high-level semantic analysis (detecting off-label intent). We categorize the solutions into three distinct architectural layers that companies are assembling to create a "defense-in-depth" strategy.

This layer operates at the point of inference. It intercepts the user's prompt before it reaches the LLM and intercepts the LLM's response before it reaches the user. It is the first line of defense, focused on security and basic safety.

This is the highest value segment for pharma. These systems run in parallel or post-hoc to analyze the content of the interaction against specific regulatory frameworks (e.g., 21 CFR Part 202, PhRMA Code).

Major life sciences platforms are embedding compliance monitoring directly into their CRMs and content management systems, viewing compliance as a feature rather than a separate product.

Pharmaceutical sales representatives are increasingly supported by AI co-pilots, which are chatbots integrated into CRM systems that help reps plan calls, summarize interactions, and retrieve approved messaging. The primary objective here is Commercial Effectiveness, but the primary constraint is Compliance.

Historically, pharma companies have restricted sales reps from entering free-text notes in CRMs to avoid the risk of recording off-label discussions or unverified claims, which could be discoverable during litigation. Reps were forced to use "drop-down" menus, limiting the richness of data captured.

The AI Solution: AI compliance monitoring is unlocking the "free text" capability.

Market Insight: The market for monitoring sales chatbots is essentially a market for enabling sales intelligence. Compliance monitoring is the "license to operate" for generative AI in the field. Without the ability to monitor and sanitize inputs/outputs in real-time, legal teams will not approve the deployment of GenAI sales assistants.

Sales chatbots often generate "Pre-Call Plans" suggesting what a rep should discuss with a doctor. This "Next Best Action" (NBA) capability is a core driver of sales efficiency.

HCP chatbots on drug websites (e.g., "Ask Pfizer" or brand-specific sites) serve as automated Medical Science Liaisons. They answer complex clinical queries, a function traditionally handled by call centers. The stakes here are even higher than in sales, as the information is directly clinical.

Unlike sales bots, HCP bots often need to discuss off-label information if it is in response to an unsolicited request (a "safe harbor" in many jurisdictions). However, distinguishing between a compliant "unsolicited request" and a bot-prompted "solicitation" is nuanced.

For HCP chatbots, the ability to recognize an Adverse Event (AE) is non-negotiable.

A compliant HCP chatbot must cite its sources. "Trust but verify" is the operating model.

While commercial compliance focuses on what is said, pharmacovigilance (PV) focuses on what is heard. The market for AI monitoring in PV is driven by the sheer volume of unstructured data generated by chatbots and social listening.

Traditional PV relies on human data entry professionals to read emails, listen to call recordings, and manually code AEs into databases like Argus. This process is slow, expensive, and error-prone.

The "Waldo" study ²⁷ provides empirical evidence that specialized, smaller models (SLMs) are superior to large generative models for compliance monitoring tasks like AE detection.

The market is stratified by the depth of pharmaceutical specialization. We identify four tiers of vendors competing for the compliance monitoring budget.

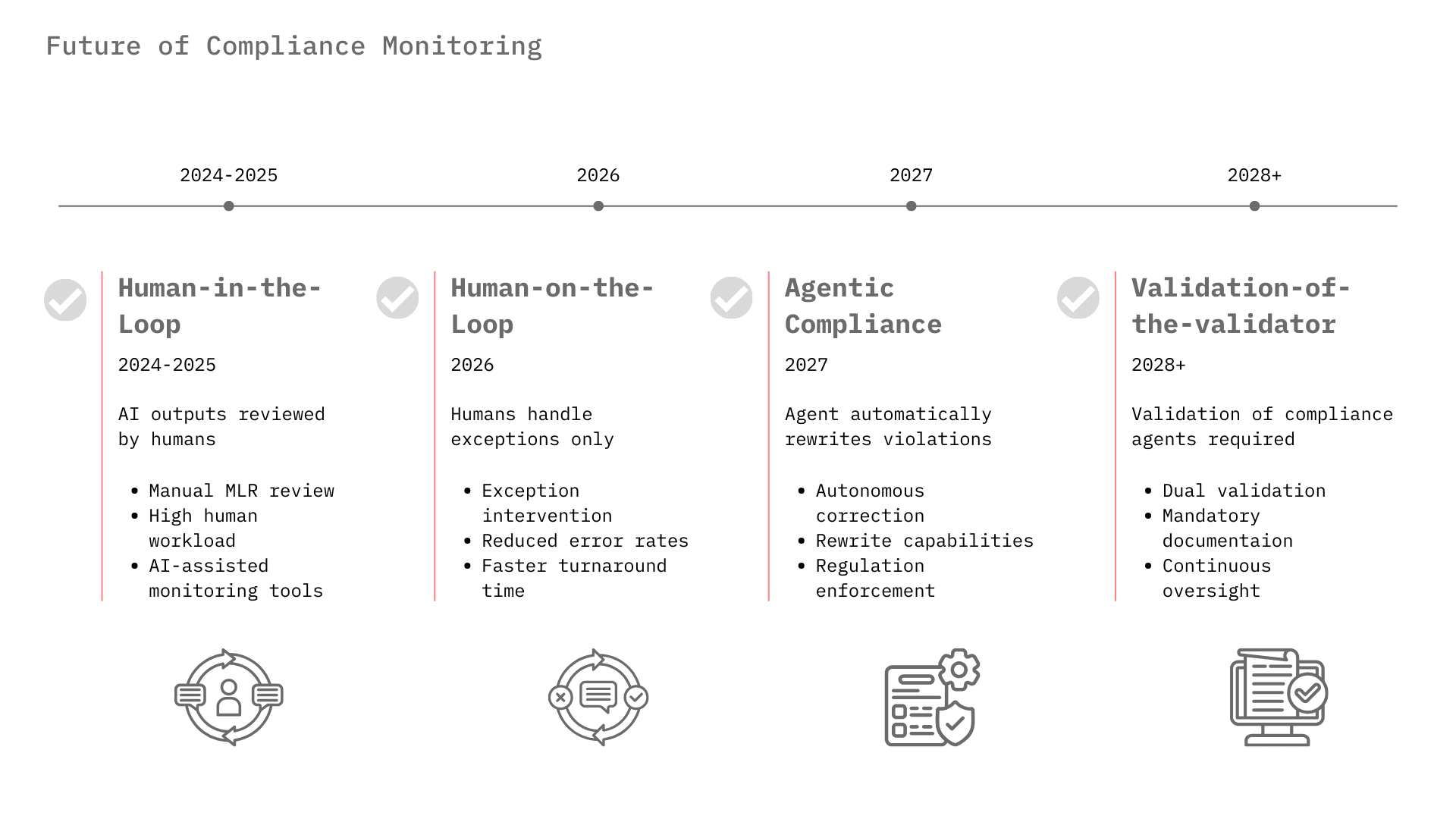

Currently, most pharma AI deployments require a human to review outputs, known as Human-in-the-Loop, due to trust issues. For example, MLR review of marketing materials is still heavily manual, though AI is beginning to pre-screen content.³² As compliance monitoring algorithms improve and demonstrate error rates lower than human reviewers, the industry will shift to Human-on-the-Loop, where the AI operates autonomously and humans manage only the exceptions flagged by the monitoring layer.

We are moving beyond passive monitoring to Agentic Compliance. In this model, a "Compliance Agent" doesn't just flag a violation; it fixes it. If a sales rep's draft email contains an off-label claim, the Compliance Agent will rewrite it to be compliant and present the revision for approval. Veeva's roadmap for "Free Text Agents" and Norm.ai's capabilities point directly to this future.¹⁰

As the EU AI Act comes into force (classifying some medical AI as "high risk") and the FDA finalizes its AI guidance, the "Compliance Monitoring" market will become a mandatory infrastructure layer, similar to cybersecurity. Companies will need to prove not just that their AI works, but that their monitoring of the AI works. This concept, "Validation of the Validator," will become a key requirement for GxP (Good Practice) compliance.

The market for AI output compliance monitoring in pharma is transitioning from a "nice-to-have" innovation project to a critical "license-to-operate" infrastructure. The proliferation of HCP and sales chatbots is currently throttled by compliance risks, specifically hallucination and off-label promotion.

For Sales Team Chatbots, the market is consolidating around platform-native solutions (Veeva, Salesforce) that integrate monitoring directly into the CRM workflow to enable capabilities like free-text capture. For HCP Chatbots, specifically in Medical Information, there is a vibrant market for specialized vendors (Sorcero, Eversana) that can guarantee scientific accuracy and adverse event detection.

Investors and stakeholders should prioritize technologies that offer Semantic Compliance, the ability to understand the meaning of regulations and apply them to conversation, over simple keyword filtering. The winners in this space will be those who can provide the Glass Box, which offers verifiable and audit-ready proof that the AI remained within the guardrails of the law. As pharma races to deploy the engine of Generative AI, the value of the brakes, the compliance monitoring layer, is skyrocketing.

Dive into our comprehensive collection of blogs covering diverse topics in project management and beyond.

Reach out to us for inquiries, support, or partnership opportunities. We're here to assist you!

Use our convenient contact form to reach out with questions, feedback, or collaboration inquiries.